Introducing Onoma: One AI Memory Across Every Provider

The AI memory layer that works across every provider. 14 models. 7 providers. One memory. Your context finally goes where you go.

ChatGPT remembers you now. So does Claude. Gemini too.

The problem? Those memories don't talk to each other.

Every AI platform has rushed to add memory features. OpenAI stores your conversation history and preferences. Anthropic's Claude just launched memory that syncs across your sessions. Google's Gemini remembers your patterns.

And every one of them locks that memory inside their walls.

Switch from ChatGPT to Claude for a writing task? Start over. Try Gemini because you need its Google integration? Rebuild your context from scratch. Use multiple AI tools because different models excel at different things? Maintain separate, fragmented identities across every platform.

This is platform lock-in disguised as a feature.

Today, we're launching Onoma: the AI memory layer that works across every provider.

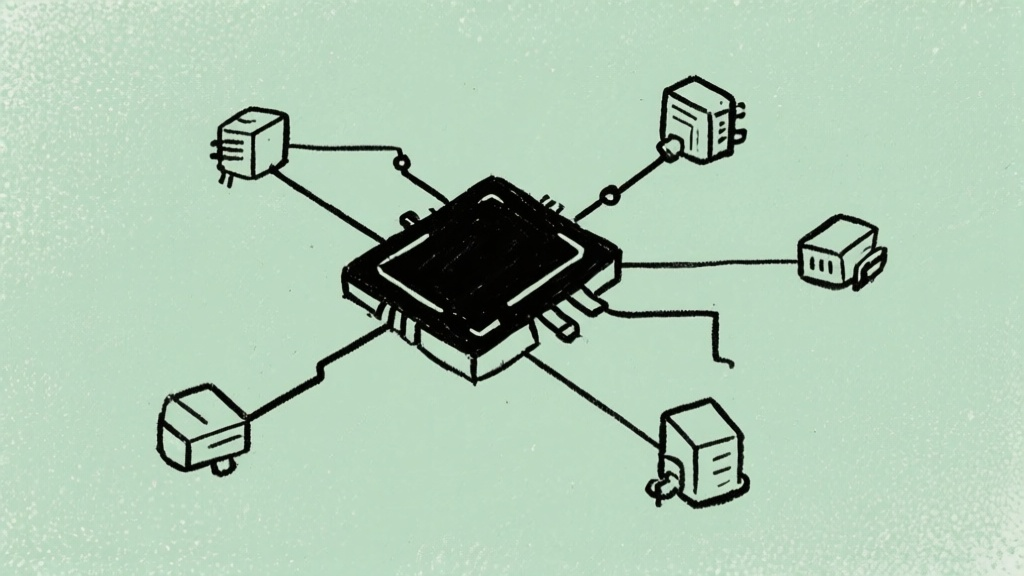

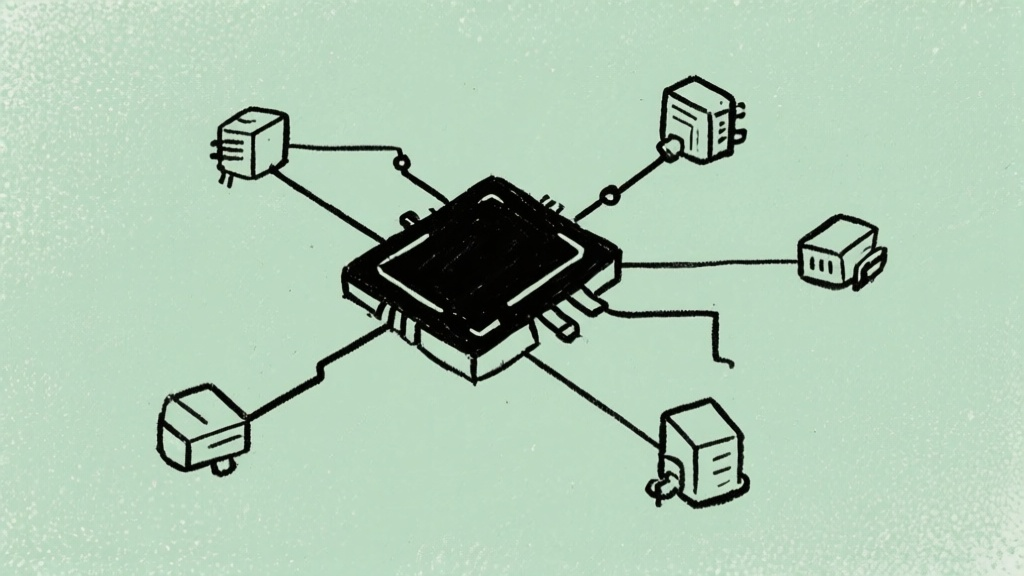

One memory, every AI

Onoma sits between you and all your AI providers. When you talk to Claude, Onoma remembers. Switch to GPT-4 mid-conversation, and that context comes with you. Compare Gemini's answer to Mistral's, both with full knowledge of what you're working on.

14 models from 7 providers: OpenAI, Anthropic, Google, xAI, Groq, Mistral, and more. One unified memory across all of them.
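
For the technically curious, here is a minimal sketch of what "one memory in front of many providers" can look like. It is an illustration under stated assumptions, not Onoma's actual implementation: the `MemoryLayer` class, the `Provider` type, and the `echo_provider` stubs are all hypothetical names, and the stubs stand in for real API clients.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical provider interface: any function from prompt text to reply text.
Provider = Callable[[str], str]


@dataclass
class MemoryLayer:
    """Illustrative shared memory placed in front of several providers."""

    providers: Dict[str, Provider]
    memories: List[str] = field(default_factory=list)

    def remember(self, fact: str) -> None:
        """Store a fact once; it becomes available to every provider."""
        self.memories.append(fact)

    def build_prompt(self, message: str) -> str:
        """Prepend stored context to the user's message."""
        if not self.memories:
            return message
        context = "\n".join(f"- {m}" for m in self.memories)
        return f"Known context:\n{context}\n\nUser: {message}"

    def ask(self, provider_name: str, message: str) -> str:
        """Send the same enriched prompt to whichever provider is chosen."""
        return self.providers[provider_name](self.build_prompt(message))


def echo_provider(name: str) -> Provider:
    """Stand-in for a real API client: echoes the prompt it received."""
    def call(prompt: str) -> str:
        return f"[{name}] {prompt}"
    return call


layer = MemoryLayer(providers={
    "claude": echo_provider("claude"),
    "gpt": echo_provider("gpt"),
})
layer.remember("Drafting a launch post about cross-provider memory")

reply_a = layer.ask("claude", "Suggest a headline")
reply_b = layer.ask("gpt", "Suggest a headline")
```

Both stub providers receive the same stored context, which is the whole point: the memory lives in the layer, not inside any one provider.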

This isn't about replacing your favorite AI. It's about using the right model for each task without losing everything you've built. Claude for nuanced writing. GPT-4 for complex coding. Gemini for research with current information. Each with complete context.

Your AI memory finally goes where you go.

What we actually built

Spaces: automatic organization

Your work context shouldn't mix with personal projects. Onoma's Spaces automatically organize your conversations by topic. Work stays separate from personal. Client projects stay distinct from hobby explorations. No folders to manage, no tags to apply.

Context surfaces when relevant. Stays hidden when not.

Adaptive routing

Not sure which model handles your question best? Onoma can decide automatically. Ask something that needs careful reasoning, and it routes to Claude. Need a quick factual lookup? It sends the question to a fast model. For complex code generation, it picks a model suited to the job.

Or ignore routing and choose yourself. Compare responses side by side and pick the best answer.
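
To make the idea concrete, here is a small sketch of routing with a manual override. The keyword rules, the category names, and the model labels (`code-model`, `reasoning-model`, `fast-model`) are placeholders we made up for illustration; Onoma's real routing logic is not described in this post and is likely more involved.

```python
import re
from typing import Optional

# Hypothetical mapping from question category to a model label.
ROUTES = {
    "code": "code-model",
    "reasoning": "reasoning-model",
    "lookup": "fast-model",
}

CODE_HINTS = re.compile(r"\b(function|bug|code|implement|refactor)\b")
REASONING_HINTS = re.compile(r"\b(why|compare|explain|should)\b")


def classify(question: str) -> str:
    """Assign a coarse category using simple keyword heuristics."""
    text = question.lower()
    if CODE_HINTS.search(text):
        return "code"
    if REASONING_HINTS.search(text):
        return "reasoning"
    return "lookup"


def route(question: str, override: Optional[str] = None) -> str:
    """Pick a model automatically, unless the user chose one explicitly."""
    if override is not None:
        return override
    return ROUTES[classify(question)]


auto_choice = route("Why should we pick Postgres over SQLite?")
manual_choice = route("Why should we pick Postgres over SQLite?", override="my-favorite-model")
```

The `override` argument mirrors the "choose yourself" option: automatic routing is a default, never a constraint.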

Cortex: privacy without theater

Here's an uncomfortable truth: convenience wins over privacy. Every time. People will share sensitive information with AI because the benefits are too valuable to resist.

So instead of privacy theater, we built actual control.

Cortex processes personally identifiable information locally on your device before anything reaches AI providers. EU data residency keeps your context in European infrastructure. GDPR-compliant by design.

Want maximum protection? Enable Cortex and your PII never leaves your machine. Comfortable with standard cloud AI? Use it normally. The choice is yours, not ours.
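
As a rough illustration of local PII handling, the sketch below swaps emails and phone numbers for placeholders before a message would be sent anywhere, then restores them in the reply. This is an assumption-laden toy, not how Cortex works internally: the regular expressions, the placeholder format, and the `redact` and `restore` helpers are hypothetical, and real PII detection covers far more than two patterns.

```python
import re
from typing import Dict, Tuple

# Deliberately simple patterns; real detection needs much broader coverage.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> Tuple[str, Dict[str, str]]:
    """Replace PII with placeholders; the mapping stays on the local machine."""
    mapping: Dict[str, str] = {}

    def replace_with(label: str):
        def _sub(match: "re.Match[str]") -> str:
            token = f"<{label}_{len(mapping) + 1}>"
            mapping[token] = match.group(0)
            return token
        return _sub

    text = EMAIL.sub(replace_with("EMAIL"), text)
    text = PHONE.sub(replace_with("PHONE"), text)
    return text, mapping


def restore(text: str, mapping: Dict[str, str]) -> str:
    """Put the original values back into a provider's reply."""
    for token, original in mapping.items():
        text = text.replace(token, original)
    return text


message = "Email jane.doe@example.com or call +44 20 7946 0958 today."
redacted, mapping = redact(message)
# Only `redacted` would be sent to a provider; `mapping` never leaves the device.
round_trip = restore(redacted, mapping)
```

In this sketch the provider only ever sees placeholders such as `<EMAIL_1>`, while the lookup table needed to reverse them stays local.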

Full visibility and control

Native AI memory is a black box. ChatGPT shows you some of what it remembers, but you can't see the underlying structure. Claude's memory triggers invisibly. You're trusting platforms with context you can't fully inspect.

Onoma is transparent by design.

See exactly what's stored. Edit specific memories. Delete anything you want. Export everything in standard formats. Your context belongs to you, and you can see every piece of it.
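
Here is a small sketch of what "see, edit, delete, export" means in practice. The `MemoryStore` class and its method names are hypothetical, and JSON is used only as one example of a standard format; this post does not specify which export formats Onoma supports.

```python
import json
from dataclasses import asdict, dataclass
from typing import Dict, List


@dataclass
class Memory:
    id: int
    space: str
    text: str


class MemoryStore:
    """Illustrative store where every memory is visible and editable."""

    def __init__(self) -> None:
        self._items: Dict[int, Memory] = {}
        self._next_id = 1

    def add(self, space: str, text: str) -> Memory:
        memory = Memory(id=self._next_id, space=space, text=text)
        self._items[memory.id] = memory
        self._next_id += 1
        return memory

    def items(self) -> List[Memory]:
        """See exactly what is stored."""
        return list(self._items.values())

    def edit(self, memory_id: int, text: str) -> None:
        self._items[memory_id].text = text

    def delete(self, memory_id: int) -> None:
        del self._items[memory_id]

    def export_json(self) -> str:
        """Export everything as plain JSON."""
        return json.dumps([asdict(m) for m in self._items.values()], indent=2)


store = MemoryStore()
first = store.add("work", "Prefers concise status updates")
second = store.add("personal", "Training for a half marathon")
store.edit(first.id, "Prefers concise weekly status updates")
store.delete(second.id)
exported = store.export_json()
```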

This matters because data portability isn't just about switching providers. It's about knowing what AI systems know about you.

Why now

The AI memory wars have begun. Every major platform is building lock-in through memory features. OpenAI automatically references your entire chat history across sessions. Anthropic just rolled out memory that creates separate contexts per project. Google integrates Gemini memory across Workspace.

Each company wants to be the single platform where your AI context lives. And once that context is deep enough, switching becomes unthinkable.

We think this is backwards.

The best model for writing isn't always the best for coding. The fastest model isn't always the most thoughtful. Different tasks genuinely benefit from different AI systems.

The future isn't one AI to rule them all. It's using the right AI for each task, with context that follows you everywhere.

Onoma makes that future practical today.

What this means for you

If you use multiple AI tools and you're tired of rebuilding context every time you switch, Onoma solves that problem immediately. Build context in any conversation and use it in all of them.

If you've wondered what ChatGPT or Claude actually knows about you, Onoma gives you complete visibility. No more wondering what's stored behind closed APIs.

If you want the flexibility to try new AI models without starting over, Onoma removes that friction entirely. Switch providers mid-thought if you want.

If you need European data residency or local PII protection, Cortex provides that layer without sacrificing the convenience of modern AI assistants.

Pricing that makes sense

Free tier: 50,000 tokens per month across 8 AI models. Three Spaces for organization. Everything you need to experience cross-platform memory.

Ambassador plan: 9 euros per month for unlimited tokens across all 14+ models. Unlimited Spaces. Full Cortex privacy features. Complete data export.

No per-query charges. No token games. One simple price for unlimited access to the best AI models with unified memory.

The bigger picture

AI assistants are becoming central to how knowledge workers think, write, and create. The companies building these tools know it. That's why they're racing to lock in your context before you can take it elsewhere.

We're building the alternative: a memory layer that belongs to you, works everywhere, and doesn't trap you inside any single platform.

Platform lock-in is the new vendor lock-in. Your AI context is too valuable to be trapped inside any company's walled garden.

Take it with you.

Get started

Onoma is available now. Create your free account at askonoma.com and connect your first AI provider in under a minute.

See how Onoma works or explore the full feature set.

14 models. 7 providers. One memory. Your context, everywhere.

Onoma is built in Europe with EU data residency by default. Questions? Contact us.